Bringing AI CoPilot to life

The context:

This was an exciting project: shaping Contentsquare’s first AI chat initiative. It was a fantastic opportunity to get involved early and help lay the groundwork for what later became a major company priority.

My role spanned multiple areas of responsibility—from shaping the project’s scope and contributing to discovery and early design, to establishing content design best practices for AI and validating the very first chat iteration with customers.

The team:

Product director, Product designer, Engineering lead, Data scientist, Data engineer, Content designer (me)

Phase 1: Discovery and scope

Ideas and sketches from the workshops that we ran

We designed the flow through a series of co-design sessions, in which the Product Designer and I created several iterations of each screen and then reviewed them with the rest of the team.

Content Design played a particularly important role in shaping the chat prompts, both in deciding which prompts to surface and in ensuring their wording was clear and natural.

We then ran an unmoderated usability test with the first iteration of the flow. We made changes based on the participants’ feedback and the team’s, and then launched a second unmoderated test.

Phase 3: Patterns and consistency

Meanwhile, we ran a survey with Contentsquare customers to understand the types of queries they might ask the AI chatbot. The survey also doubled as a way to recruit participants for later usability testing of the first CoPilot prototype.

The survey revealed which Contentsquare resources customers found most useful and, just as importantly, how they preferred to see those resources summarized. These insights directly informed how we shaped the response structure of the AI tool.

Using this input, we formulated a set of sample questions to test with the tool the Engineering team was developing. By analyzing the responses, we were able to recommend improvements to both structure and tone, helping the AI deliver answers in a way that felt clearer, more concise, and user-friendly.

Once the Engineering team had made significant progress, we began testing the tool on the staging environment. This enabled us to put our customer hats on and provide feedback on improvements we could make. One of these was a set of patterns we created for how the AI’s answers should be structured.

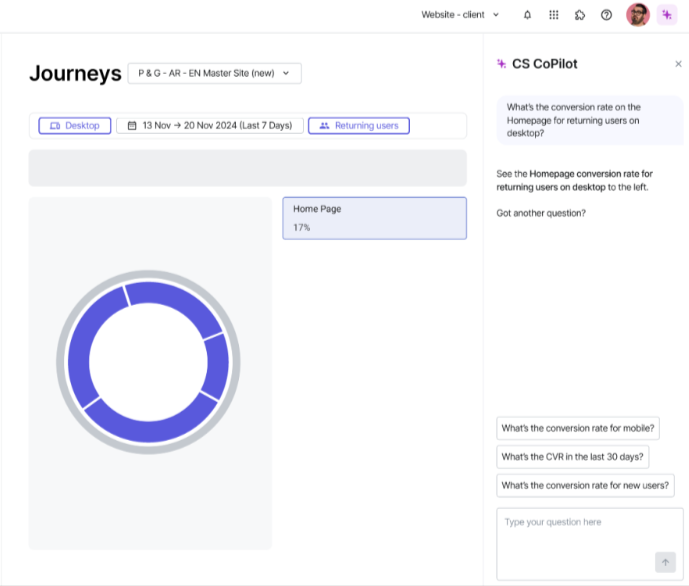

Building on the earlier survey insights, we also designed suggested prompts within the AI side panel to encourage customers to keep the conversation going.

We knew that this was only the beginning of leveraging AI tools, so we saw the need for a centralized place with content patterns that could be applied across all of the company’s AI tools, not just the CoPilot. I created guidelines for structuring the different types of responses the AI could give, e.g. generic answers, requests for more information, loading states, redirections, or cases where no answer could be generated. Alongside the patterns, I provided example copy. This way, we’d create consistency across all AI tools to come.

The project started as a Hackathon idea and was heavily inspired by the AI CoPilot developed by Heap, which Contentsquare had recently acquired. The role of the AI CoPilot is to help users find answers to their analytics questions faster and more easily when using the platform.

It had the potential to address critical user pain points—particularly helping new users onboard faster and uncover insights more easily. Both were challenges with a direct impact on adoption and retention.

I got involved quite early on, which meant that I was able to take part in the discovery phase. I collaborated very closely with the Product Designer leading the project and a Product Director to define:

Business goal

User needs, profiles, and pain points

Potential risks

Hypothesis

This was one of those rare occasions where business objectives aligned almost perfectly with the user needs, which made defining our hypothesis that much more straightforward.

Our assumption was that if the AI chat feature could help users onboard faster and get value more quickly, it would in turn drive adoption, engagement, and retention.

Additionally, we took a deep dive into Heap’s CoPilot. Their work served as a foundation, but our goal was not just to replicate it. We wanted to understand its mechanics and identify opportunities to improve the quality and relevance of the answers it provided to users.

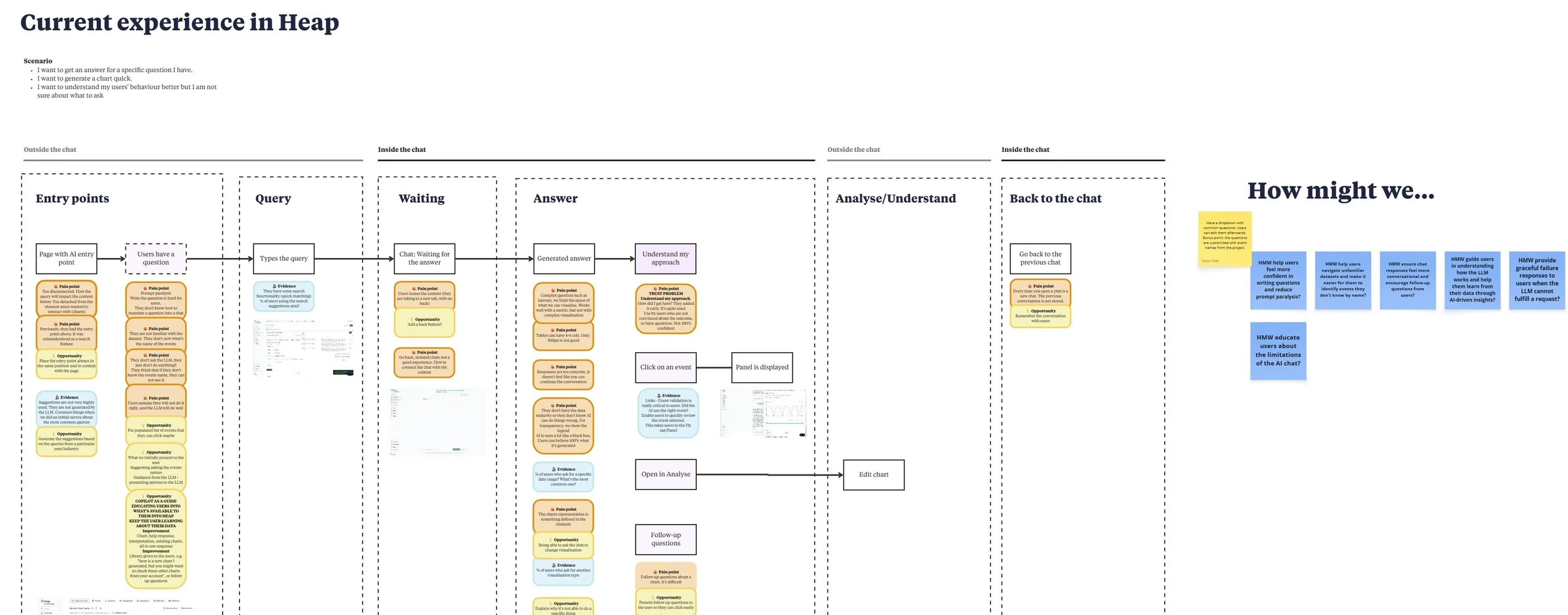

Mapping the experience of Heap’s AI CoPilot, and brainstorming how we might build on it for Contentsquare’s CoPilot

Showcasing the value of Content Design

Our deep dive into Heap’s AI tool inspired me to re-design some of its most frequently used replies. I focused on making the outputs more helpful, trustworthy, and aligned with our vision for the product. The goal was to demonstrate to Product and Engineering what “good” could look like, especially since building trust and accuracy was critical for adoption. We knew that many customers would approach the AI assistant with caution at first, so reliability had to be front and center.

Because the teams working on the AI CoPilot were new to Content Design, I also began documenting my contributions in a diary. This not only helped track my collaboration with the Product Designer but also served as a practical way to highlight the broader value of Content Design—showing that it’s not just about writing copy, but about shaping a seamless, credible user experience.

Phase 2: Alignment and design

With the foundations in place, we started aligning with key stakeholders—specifically the product teams whose areas would be most affected by the CoPilot. The aim was to validate, refine, and, where necessary, challenge our discovery outcomes.

We facilitated two workshops with these teams to define entry points for the AI chat and map the overall flow. Together, we brainstormed and sketched potential solutions, then voted on the strongest ideas. The winning elements from each sketch were combined and carried forward into the design process.

The initial designs were therefore rooted in these ideation workshops, making the process genuinely collaborative and ensuring that multiple perspectives shaped the outcome.

Creating patterns for AI tools to ensure consistency and a smoother customer experience

The final step in my involvement with this project was conducting usability testing with selected customers. We tested the first workable iteration of the AI CoPilot, alongside a couple of other AI tools in development.

The feedback proved especially valuable for the Engineering team, as it revealed how the tool performed in something close to a real-world environment. On the design side, we were able to validate two key aspects: how easily customers could discover the feature and how much they trusted it.

After completing the interviews, we held a workshop to synthesize the feedback and define next steps. From a usability perspective, the priorities included improving the overall experience by giving users more control, increasing visibility into system status, and making interactions smoother and more efficient.

First iteration of the AI CoPilot that we tested with customers

The result

Although I wasn’t involved long enough to see the CoPilot released, the experience was incredibly valuable. My biggest takeaway was the level of involvement and impact I was able to have as a Content Designer. Because this was my first AI project, it was eye-opening to see just how fundamental content is to shaping an AI tool and the user experience around it. Being able to lay the foundations, demonstrate the value of Content Design, and help set patterns for future AI initiatives was not only rewarding but also a true career highlight.

More importantly, this project reaffirmed what excites me most: stepping into unexplored areas, bringing clarity to complexity, and showing how thoughtful content can make new technology feel intuitive and trustworthy.